Since its release, AutoGPT has captivated the minds of many with its goal-based autonomous execution and provides us a glimpse into what may be next on the journey towards AGI (artificial general intelligence). By combining LLMs (large language models) with long-term memory and self-critique, AutoGPT uses cutting-edge AI to break down high-level goals into smaller subtasks and utilizes tools (e.g. web search, code interpreters, API invocations) to fulfill them. However, to ensure optimal performance and mitigate potential risks, it is crucial to implement robust monitoring systems.

To help explain the implications of AutoGPT and how effective monitoring can be added to it, we're writing a two-part series on monitoring AutoGPT and other autonomous agents. In this blog post, we delve into how AutoGPT works and why it needs to be monitored.

Understanding AutoGPT

AutoGPT aims to carry out high-level abstract goals. For example, the default Entrepreneur-GPT configuration has the following goals:

- Increase net worth

- Grow Twitter Account

- Develop and manage multiple businesses autonomously

Rather than requiring specific instructions, AutoGPT can reason about high-level goals and then plan and execute smaller tasks that move towards them. This independence and autonomy is what distinguishes AutoGPT from ChatGPT: it turns the AI into a more capable and general agent that can reason, plan, and learn from its errors.

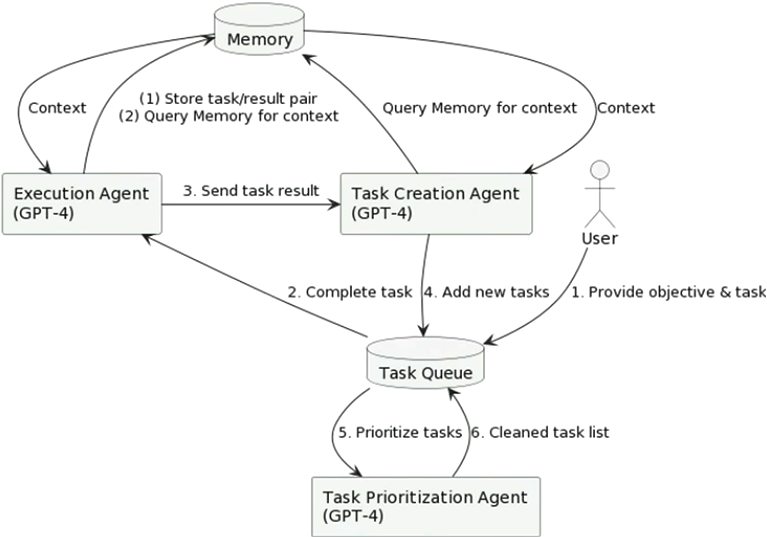

When it is run, AutoGPT enters an interaction loop where each iteration consists of a planning step followed by an execution step. This process can be visualized as a block diagram (originally presented by Andrej Karpathy at Microsoft Build 2023):

As a user, you only need to provide an objective (step 1) and the AI will autonomously carry out the remaining steps 2-6 repeatedly until your goal is accomplished. You do not supply detailed instructions; the AI is put on autopilot and acts on its own to accomplish your desired goals.
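The plan-then-execute loop described above can be sketched in a few lines of Python. This is a minimal illustration, not AutoGPT's actual implementation: `run_agent`, `AgentRun`, the `"finish"` convention, and the stand-in planner are all hypothetical names, and a real agent would call an LLM where `demo_planner` appears.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical types for illustration; none of these names come from AutoGPT.
Planner = Callable[[str, List[str]], Tuple[str, str]]  # (goal, memory) -> (tool, argument)
Tool = Callable[[str], str]


@dataclass
class AgentRun:
    goal: str
    memory: List[str] = field(default_factory=list)
    steps: int = 0


def run_agent(goal: str, planner: Planner, tools: Dict[str, Tool],
              max_steps: int = 10) -> AgentRun:
    """Alternate planning and execution until the planner finishes or the step cap is hit."""
    run = AgentRun(goal=goal)
    while run.steps < max_steps:
        # Planning step: decide the next action from the goal and what happened so far.
        tool_name, argument = planner(run.goal, run.memory)
        if tool_name == "finish":
            break
        # Execution step: invoke the chosen tool and remember the outcome.
        tool = tools.get(tool_name)
        result = tool(argument) if tool else f"unknown tool {tool_name!r}"
        run.memory.append(f"{tool_name}({argument!r}) -> {result}")
        run.steps += 1
    return run


def demo_planner(goal: str, memory: List[str]) -> Tuple[str, str]:
    # Stand-in for the LLM: search once, then stop.
    if not memory:
        return ("search", goal)
    return ("finish", "")


demo_tools: Dict[str, Tool] = {"search": lambda query: f"results for {query}"}
demo_run = run_agent("grow Twitter account", demo_planner, demo_tools)
```

The `max_steps` cap is a design choice of this sketch: without some bound, the loop runs for as long as the planner keeps proposing actions.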

The importance of monitoring

The previous section's description of autonomous AIs driving themselves to accomplish goals may seem alarming, and justifiably so. Failures in AI systems have had real-world consequences ranging from chatbots threatening users to car crashes. At the same time, the underlying language model powering AutoGPT (OpenAI's GPT-4) is not cheap. In this section, we take a closer look at the potential risks and the importance of robust monitoring.

Safety

To empower AutoGPT to carry out useful tasks, it is endowed with a list of abilities ranging from running Google searches to browsing websites to running Python files. These abilities introduce significant risks: data can be exfiltrated through the HTTP GET requests made while browsing, DDoS attacks can be orchestrated through API calls, and running Python files risks remote code execution (RCE). A similar RCE vulnerability (CVE-2023-36258) has recently been documented in LangChain, another popular Python LLM library.

The developers of AutoGPT have recognized the potential safety risks arising from endowing autonomous AI with tools, and have made the following a part of AutoGPT's terms of use:

As an autonomous experiment, Auto-GPT may generate content or take actions that are not in line with real-world business practices or legal requirements. It is your responsibility to ensure that any actions or decisions made based on the output of this software comply with all applicable laws, regulations, and ethical standards. The developers and contributors of this project shall not be held responsible for any consequences arising from the use of this software.

This means that the responsibility for ensuring safe, ethical, and compliant use falls on you. To verify that AutoGPT is behaving safely, you must first be able to monitor how it is behaving at all.
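One simple way to gain that visibility is to record every tool invocation before it runs. The sketch below is an illustration under stated assumptions, not a feature of AutoGPT: the `monitored` wrapper, the `audit_log` structure, and the allowlist are hypothetical, and the two lambdas stand in for real tools.

```python
import time
from typing import Callable, Dict, List, Set

AuditLog = List[Dict[str, object]]


def monitored(name: str, tool: Callable[[str], str],
              log: AuditLog, allowed: Set[str]) -> Callable[[str], str]:
    """Wrap a tool so each call is logged, and refused if the tool is not allowlisted."""
    def wrapper(argument: str) -> str:
        entry = {
            "tool": name,
            "argument": argument,
            "time": time.time(),
            "allowed": name in allowed,
        }
        log.append(entry)  # log first, so refused attempts are visible too
        if not entry["allowed"]:
            return f"blocked: {name} is not on the allowlist"
        return tool(argument)
    return wrapper


audit_log: AuditLog = []
allowed_tools = {"search"}  # hypothetical policy: searching is fine, running code is not

search = monitored("search", lambda q: f"results for {q}", audit_log, allowed_tools)
run_python = monitored("run_python", lambda path: "executed", audit_log, allowed_tools)

search_result = search("weather")
python_result = run_python("script.py")
```

Logging before the allowlist check means the audit trail also captures what the agent tried to do, not only what it was permitted to do.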

Costs

In addition to safety risks, AutoGPT also presents a financial risk to its operators. Another part of the terms of use states:

Please note that the use of the GPT-4 language model can be expensive due to its token usage. By utilizing this project, you acknowledge that you are responsible for monitoring and managing your own token usage and the associated costs. It is highly recommended to check your OpenAI API usage regularly and set up any necessary limits or alerts to prevent unexpected charges.

At the time of writing, GPT-4's pricing is set at $0.03-0.06 per 1000 tokens with a limit of 8192 tokens per request. A single request that uses the full 8192 tokens therefore costs roughly $0.25 to $0.49, depending on the rate. Given that AutoGPT performs multiple queries to LLMs in an unbounded interaction loop, your costs could quickly add up.
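The arithmetic behind these figures is easy to reproduce. The snippet below uses only the prices and token limit quoted above; the figure of 100 loop iterations is a hypothetical chosen purely for illustration.

```python
MAX_TOKENS_PER_REQUEST = 8192   # request limit quoted above
PRICE_PER_1K_LOW = 0.03         # dollars per 1000 tokens, low end of the quoted range
PRICE_PER_1K_HIGH = 0.06        # dollars per 1000 tokens, high end of the quoted range


def request_cost(tokens: int, price_per_1k: float) -> float:
    """Dollar cost of a request that consumes `tokens` tokens at the given rate."""
    return tokens / 1000 * price_per_1k


low = request_cost(MAX_TOKENS_PER_REQUEST, PRICE_PER_1K_LOW)
high = request_cost(MAX_TOKENS_PER_REQUEST, PRICE_PER_1K_HIGH)
print(f"one full-context request: ${low:.2f} to ${high:.2f}")

# Hypothetical run: 100 loop iterations, each filling the context window.
iterations = 100
print(f"{iterations} such requests: ${iterations * low:.2f} to ${iterations * high:.2f}")
```

A request that fills the context window costs about $0.25 to $0.49, so a run of 100 such requests would cost somewhere between about $25 and $49.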

The disclaimer also suggests regularly checking your OpenAI API usage. While this is a good first step and OpenAI's usage reporting can give a good sense of aggregate use, it leaves much to be desired.

Usage is broken down by day, but all activity for a billing account is aggregated together, and the contents of the prompts and completions that incurred the token usage are unavailable. As a user of AutoGPT, you need to know the cost of each individual LLM interaction in order to optimize costs and performance.
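As a rough sketch of what per-interaction tracking could look like, the code below records the prompt, completion, token counts, latency, and cost of every call. Everything here is hypothetical: `tracked_call`, `LLMCallRecord`, and `fake_llm` are illustrative names, the token counts are made up, and the split of the quoted price range into a prompt rate and a completion rate is an assumption. In practice the token counts would come from the model provider's response.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Assumed rates in dollars per 1000 tokens, taken from the range quoted above.
PROMPT_PRICE_PER_1K = 0.03
COMPLETION_PRICE_PER_1K = 0.06

# A model call returns (completion text, prompt tokens, completion tokens).
LLMFn = Callable[[str], Tuple[str, int, int]]


@dataclass
class LLMCallRecord:
    prompt: str
    completion: str
    prompt_tokens: int
    completion_tokens: int
    latency_seconds: float

    @property
    def cost(self) -> float:
        """Dollar cost of this single interaction at the assumed rates."""
        return (self.prompt_tokens * PROMPT_PRICE_PER_1K
                + self.completion_tokens * COMPLETION_PRICE_PER_1K) / 1000


def tracked_call(llm: LLMFn, prompt: str, records: List[LLMCallRecord]) -> str:
    """Call the model and append one record describing the interaction."""
    start = time.perf_counter()
    completion, prompt_tokens, completion_tokens = llm(prompt)
    latency = time.perf_counter() - start
    records.append(LLMCallRecord(prompt, completion, prompt_tokens,
                                 completion_tokens, latency))
    return completion


def fake_llm(prompt: str) -> Tuple[str, int, int]:
    # Stand-in for a real model call; the token counts are invented.
    return ("search the web", 1000, 500)


records: List[LLMCallRecord] = []
reply = tracked_call(fake_llm, "Plan the next step", records)
total_cost = sum(record.cost for record in records)
```

Because each record keeps the prompt and completion alongside the cost, an expensive call can be traced back to the exact text that caused it, which aggregate daily reporting cannot do.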

Conclusions

We've opened AutoGPT's black box and seen how its ability to interact with real-world systems brings both significant capabilities and increased risks. For autonomous agents like AutoGPT to be used productively, safety and cost efficiency are mandatory requirements. Unfortunately, AutoGPT's developers waive all liability and recommend using OpenAI's built-in usage reporting. While that is a good first step, it does not enable inspecting and monitoring the costs, latencies, and contents of individual LLM interactions. In later blog posts, we will show how the Blueteam AI platform can be used to fill these gaps and help enable safe and cost-efficient operation of autonomous agents like AutoGPT.

Want to jump ahead and get started with monitoring and securing your LLM applications? Book a demo at blueteam.ai to learn more.